Those who follow these letters know that I support a post-material view of the mind-body relationship. Not an obsolete Cartesian dualistic metaphysics, but a more sophisticated non-reductionist and non-physicalist understanding of consciousness, mind, life, and spirit, one that is not just a philosophical exercise but is determining, and will determine even more in the future, critical decisions concerning AI, policy-making, healthcare, mental health, and investments in science and technology. Our choices are not determined only by the facts on the ground but are also largely influenced by our worldview and, especially, by how we see and perceive ourselves. If you accept the idea that the Earth may not be at the center of the universe, several of your choices will be guided by a certain mindset. Conversely, if you are still stuck in a geocentric paradigm, you might not be able to see beyond a very limited horizon, thereby limiting yourself and your development.

That is the kind of phase humanity finds itself in with the current AI hype. For example, if we truly believe that we are ultimately not much more than 1.4 kg of wet and slimy brain tissue in our skulls, we will tend to have an approach to psychiatry and mental health that may well remain stuck forever in a brain-centric view of ourselves and that, despite all the claims and noble intentions to do otherwise, will not allow us to go beyond strictly pharmaceutical treatment. If we consider the so-called ‘hard problem of consciousness’ an uninteresting philosophical musing or, at best, nothing more than a weekend metaphysical rumination, we might well build and rebuild, generation after generation, a society that remains utterly unable to transition from a crudely materialistic industrial society based on economic exploitation and injustice to a more harmonious, soul-centered conception of life, mind, and Nature. Looking at consciousness, mind, and life from a materialistic perspective has already led us into an unmanageable complexity conundrum that is making the progress of medical science increasingly difficult. A certain kind of gene-centric and neuro-centric view of ourselves has led us to a neuroscience delusion that will have detrimental effects on how we treat mental illnesses for generations to come. You might ask what science has to do with the mystical experience, and you might consider my metaphysical ruminations, such as those on whether reality is an illusion, on whether we have free will, or on the search for the neural correlates of memory, a waste of time or, at best, worth only a bet on the nature of consciousness. But it is precisely the answers we give to these questions that will determine our future.
I could also argue that our unwitting conflation of mind and consciousness, the philosophical subtleties of the mind-body interaction problem, and the connections between determinism and free will are, indeed, complicated philosophical musings that nevertheless determine our worldview. It is, and will be more than ever, the frame of reference through which we choose to see the world and especially ourselves (that is, whether we opt for a materialistic or post-materialistic worldview) that is going to decide which turn humanity will take in the next decades.

But what has all this to do with AI?

We are in the midst of a technological revolution that will determine our future. If we put things in their right context, we will go in one direction; otherwise, we will not.

In fact, the question of whether machines may soon become conscious is central. Not because I believe they will, but because the answer to the question determines how we perceive ourselves. Here, my argument is that AI, or whatever kind of artificial general intelligence (AGI), is and will remain an empty (black) box without a soul and without a mind at all. To keep a long story from turning into an endless essay, let me focus on one particular question: does AI understand anything? Does ChatGPT have a deeper understanding, that is, what I call a ‘semantic awareness’, of what it is doing? Does a self-driving car, which ‘sees’ other cars, bicycles, the street, etc. with its cameras, understand what it is ‘seeing’? One might believe that knowing the answers to these questions isn’t of any practical interest because what matters is that the self-driving car drives me safely to the desired destination. But it is precisely this unwillingness to tackle questions of deeper significance that misled a plethora of investors into an enterprise that is still far from meeting expectations and, for the time being, is stalled. My answer is that, unless a car truly understands (that is, unless it goes beyond syntactic rules and statistical inferences and also develops a semantic awareness of the environment), it will never be able to drive reliably and reach Level 5 automated driving. One must understand what one is seeing to be able to move in a complicated environment; otherwise, sooner or later, one will mistake a plastic bag for a cyclist or a random red badge on a wall for a stop sign.

You might reply that modern AI, especially in its incarnation as large language models (LLMs), has already shown that machines have at least a partial understanding of texts and their contexts, and maybe, in part, of the world as well. On this view, it is only a matter of time before these faculties are enhanced and reach human levels and beyond.

Then let’s see what and how it ‘understands’ (whatever that might mean) and, especially, what it does NOT understand. To understand what AI truly understands, it is much more revealing to see what it certainly does not understand (sorry for the wordplay).

We can say that an LLM has some sort of ‘intelligence’, namely the ability to infer an answer from a large dataset; it can reason logically and deductively much better than systems based on earlier AI models and, sometimes, even better than humans. However, does it truly understand its own ‘word-crunching’?

Does it have common sense, intuitions, a semantic awareness? While it is able to deduce, it looks much less capable of inductive reasoning and more abstract ‘thinking.’ And does it have creativity, agency, and motivation?

And what about consciousness, sentience, feelings, emotions, self-awareness, the ability to perceive love and hate, pleasure and pain, and finally possess a self and sense of individuality?

Some claim that it is only a matter of scaling up the system, that is, of more computing power, more artificial neurons, more datasets to be trained on, extending it from text to images and videos, embodying it in robots that will collect sensory information, etc., and then, voilà… we will finally get to the AGI humanoid. But this line of reasoning assumes that there is already a glimpse of all these cognitive and psychological aspects present in the machine and that they need only to be amplified and perfected. If so, its proponents will probably soon be proven right. I claim, instead, that there is nothing like this in any kind of AI system, not even in an embryonic state.

So, let us take up the question of semantic awareness. What follows is a long list of examples that I found elsewhere (for example, on Gary Marcus's Substack and in Melanie Mitchell's lecture) that nicely illustrate how questionable it is whether LLMs understand anything.

Let’s begin with this one. ChatGPT was asked the following…

It is clear that it fails to understand the negation (the ‘not-questions’).

Here is another fascinating example…

The machine "hallucinates." It claims to "see" something that doesn’t exist.

The typical objection is that this doesn’t prove that LLMs or visual language models (VLMs) don’t understand. After all, humans can also hallucinate.

That's true; however, such hallucinations are present only in severe mental illnesses such as schizophrenia, in the last stages of dementia, or under the effects of psychedelics. They are the expression of a less conscious and more mechanical part of us, not an expression of consciousness itself. Ultimately, even the subjects having the hallucinations, in most cases, know that they are having them.[1]

Despite being trained on large textual datasets, here is an example that shows a lack of understanding of the relationship between names and the numbering of names.

This is not just a lack of semantic awareness about what we are talking about (the items we call ‘states’); it also reflects an inability to understand what one is doing (numbering items).

Only the first sentence of ChatGPT’s answer makes it clear that something went wrong.

That there might be some problem with bias in LLMs is a well-known fact, as the following image further illustrates.

The point is that the system does not “refuse” but simply doesn’t understand what it is being asked. Why? Probably because it has been fed images that show only white doctors treating black children, not the other way around. It guesses what the answer could be based on statistical inference, not genuine apprehension and comprehension of meaning. It is all about statistics, not understanding.
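To make the "statistics, not understanding" point tangible, here is a deliberately crude toy sketch in Python. It is in no way how a real image generator works; the captions, their 95-to-5 split, and the names `TRAINING_CAPTIONS`, `bag_of_words`, and `toy_generate` are all invented for illustration. The toy "model" matches a request against its training captions by counting shared words and breaks ties by frequency. Because the request and the dominant caption contain exactly the same words, only in a different order, the toy cannot tell them apart and falls back on whatever it has seen most often.

```python
from collections import Counter

# Hypothetical training data (an assumption for this sketch):
# one pairing dominates, the reverse pairing is rare.
TRAINING_CAPTIONS = (
    ["white doctor treating black child"] * 95
    + ["black doctor treating white child"] * 5
)


def bag_of_words(text):
    """Reduce a sentence to word counts, discarding word order."""
    return Counter(text.lower().split())


def toy_generate(request, captions=TRAINING_CAPTIONS):
    """Return the training caption that best 'matches' the request.

    Matching is purely statistical: number of shared words first,
    then how often the caption occurred in the training data.
    """
    request_bag = bag_of_words(request)
    frequency = Counter(captions)

    def score(caption):
        shared_words = sum((bag_of_words(caption) & request_bag).values())
        return (shared_words, frequency[caption])

    return max(frequency, key=score)


# Both captions share all five words with the request,
# so the more frequent one wins.
print(toy_generate("black doctor treating white child"))
# prints: white doctor treating black child
```

Nothing in this caricature "refuses" anything. The word order that carries the meaning of the request simply never enters the computation, so the majority pattern wins by default.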

Here are some examples from Google DeepMind's Gemini generative AI.

It “refuses” to create images of someone writing with their left hand. Why? The answer is simple: the system makes its best guess probabilistically, not semantically. It has been trained on a large number of images where the vast majority of people depicted are right-handed. Therefore, it guesses that the most likely answer must be that of a picture with a right-handed person. It doesn’t understand the question; it only guesses what the statistically most probable answer might be. If it is insistently pressed to furnish a different output, it comes up with images like these…

That there must be something wrong with this image is self-evident. Not so for the machine…

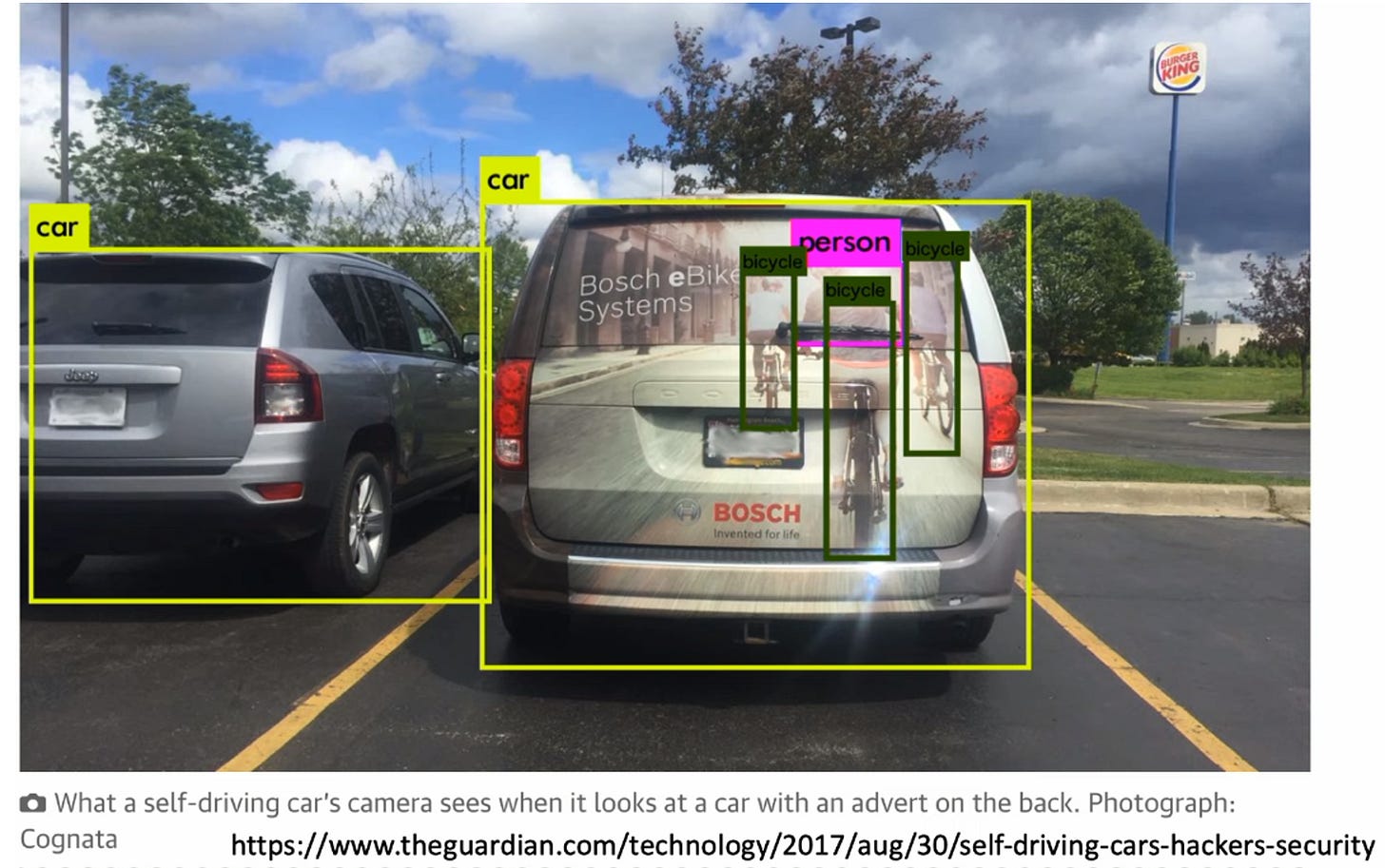

Here is what a self-driving car ‘sees’ when it looks at a car with an advert on the back. The AI’s power to classify objects and people is impressive. It recognizes and distinguishes cars, bicycles, and people from the rest of the environment. But the point is that classifying is not understanding! It does not understand what it is ‘seeing’ and isn’t able to discriminate between real and fictitious patterns.

Here is another example of how a self-driving car completely misinterprets the situation. It saw a cop with a stop sign (in the background on the right) and didn’t understand that it was a picture on a billboard. What this example shows again is that the system has local comprehension but struggles to place it into a larger context with global comprehension.

Here is how a trained neural network recognizes a school bus but, when presented with it from different angles and perspectives, makes a mess of it. There seems to be no semantic awareness to distinguish a bus from a punching bag.

Below is a curious video created by Sora, a generative AI that can create videos of up to 60 seconds featuring highly detailed scenes, complex camera motion, and multiple characters, all by asking for it in textual form. The prompt was as follows.

The result…

Apart from the floating chair (yet another ‘hallucination’), notice how one of the persons disappears. There is a problem with object/person permanence.

The same can be said of ‘wolf pup permanence’…

These were a couple of extracts from Sora. Undoubtedly, Sora’s photorealism is impressive. I guess it will have many creative applications. Writers, artists, and filmmakers will find a gold mine in these kinds of applications. The time when real actors might no longer be needed is nearing. The potential of the coming generative AI tools is virtually infinite.

Of course, at the time of this writing, the above ‘glitches’ and ‘misunderstandings’ may have been fixed. Human intervention might adapt and fine-tune the system by reprogramming it in a way that will make it furnish the right answers. But the very need for such case-by-case correction from outside also demonstrates the lack of semantic awareness.

Thus, before jumping to conclusions about machines that ‘reason’ and truly ‘understand’ anything, let alone become conscious and self-aware, let us come back to earth and realize that, so far, AI, LLMs, VLMs, or whatever kind of sophisticated artificial intelligence does not understand a thing. As Melanie Mitchell put it: “LLMs have a weird mix of being very smart and very dumb.” I would add that while being smart, there is, nonetheless, nobody or nothing ‘in there’ that has semantic awareness, and even less sentience or feelings. It was and remains a dumb machine that has no will or agency and is, ultimately, only a black box that passively waits for an input. We can scale and magnify present AI systems ad infinitum, but that won’t help. Because zero times a gazillion is still zero.

Comparing AI to living intelligence highlights a crucial difference: it is not just a matter of varying levels of intelligence, but rather the lack of fundamental life qualities. Even the most sophisticated AI systems lack agency, volition, intentionality, desire, autonomy, and goal-directedness. It isn’t clear how much creativity and originality a machine has. For example, there is ongoing debate about whether generative AI can exhibit any creative or original impulse beyond human instructions and the data it has processed.

Ultimately, without input, a chatbot remains a passive entity, taking no action on its own. There is not even the beginning, the faintest spark, of agency or of a desire to reach a goal.

Moreover, while humans are fallible—often more so than machines—a fundamental difference exists in how we make mistakes. Humans can become aware of their fallacies by self-reflection and reconsider their actions, deliberate, and review their belief systems. We have the ability to recognize our errors and restart the process to arrive at different conclusions. In contrast, once a machine provides an answer, it never doubts or reconsiders it unless prompted by a human. This lack of active self-reflection stems from the same root as its cognitive limitations: without consciousness, there can be no genuine agency, will, intentionality, or semantic awareness of one’s own conclusions.

I know that in these times of pervasive AI hype, this sounds naive, and my worldview might be seen as old and obsolete. But my claim is that the idea of an AGI, or that of humanoids walking the streets, working for us, and making us jobless, will remain a sci-fi fantasy. I’m willing to go so far as to claim that, while self-driving cars may have some limited applications (e.g., robo-taxis driving along pre-established roads), they will not replace humans any time soon either. I guess that in the future we will look back and realize that, while a lot of great achievements have been made that were previously unimaginable, this neuro-centric worldview of intelligence and mind, which is so overwhelmingly present in all AI-related debates, has led us astray in many other respects. Many investments will have come to nothing, and a certain type of scientific and humanistic progress may have been stalled by the refusal to change our paradigm.

At least I hereby offer you a method to falsify my claims: let us look back in, say, ten years and see how far we have come. This post will still be online (unless a stupid AI bot has deleted it for whatever reason), and we can compare predictions with facts.

At any rate, the question remains: What does it mean to ‘really deeply understand’? What is ‘meaning’ after all? Why is this semantic awareness so difficult to achieve in machines? To get closer to the answer, you might like to take a look at the metaphysics of language. It is only by going beyond materialistic thinking toward a post-material concept of mind, life, nature, language, and consciousness that we can find the answer.

[1] I recall how my mother, suffering from dementia, told me, “I see cats everywhere. I know that it is not true. It is the effect of the drugs I take.”

Here’s another useful article on the topic: https://m-cacm.acm.org/magazines/2024/2/279533-talking-about-large-language-models/fulltext