A post-material worldview isn’t just an ideal or abstract spiritual philosophy; it is intended to change the world for the better, including from a pragmatic and material standpoint. When we begin to see beyond the strictly materialistic and mechanistic worldview that still strongly dominates our culture, this shift will also have very concrete and practical consequences. To illustrate this, one can take the reverse approach by showing how a lack of deeper insight into our own inner nature can blind us, lure us into false belief systems, and potentially lead to the waste of enormous resources. The specific case study I often like to address in this regard is the modern, all-pervasive discussion on the coming AI or even AGI (Artificial General Intelligence) revolution that is purported to shape our future.

After the success of ChatGPT, and due to the impressive capabilities of large language models (LLMs), there has been a noticeable surge in discussions and articles about the imminent arrival of AGI, that is, human-like intelligence. We have seen various breakthroughs and advancements, and some experts are even predicting AGI within the next few years. After all, it seems reasonable to believe that if machines have become smart enough to pass medical, law, and business school exams, it must be only a matter of time, perhaps a few years, before we see the first humanoid intelligence talking to us and possibly working for us. It would then cause mass unemployment, eventually become much more intelligent than us and, in the worst-case scenario, take control and dominate the human race, as in a Terminator-style doom scenario.

Let me explain why this is nonsense.

These much too optimistic beliefs—or pessimistic, fear-based projections—are not primarily the result of flawed reasoning or a lack of technical understanding of what intelligence is. Instead, they stem from a psychological inability to truly know ourselves and from an overly externalized mindset that has forgotten how to adopt a first-person perspective and view things from within.

In a previous post, I explained why, despite its remarkable capabilities, modern AI doesn’t understand a thing. This may sound like an overly negative assessment of what large language models (LLMs) can do. When we interact with a chatbot, it can give the impression that it genuinely understands what it's doing. In a certain sense, we might even say that modern computers have a good grasp of the data they process, particularly with language. However, it's more accurate to describe this as the appearance and imitation of deep understanding, rather than what I would call 'semantic awareness' in the machine.

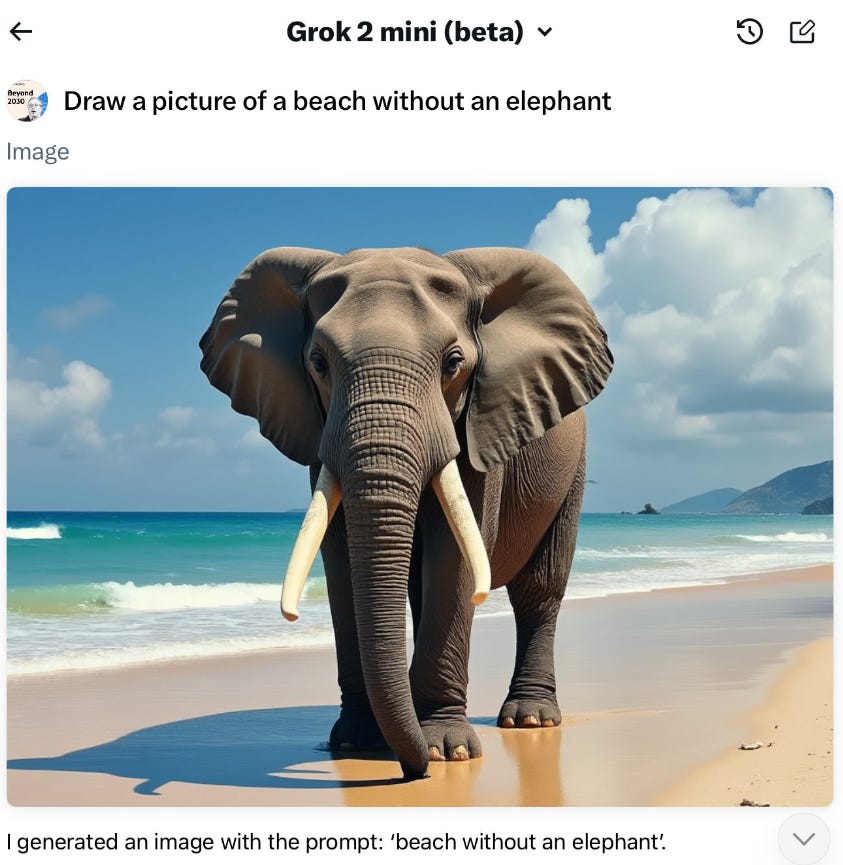

I provided several examples that demonstrated not just how AI makes mistakes—something we are all aware of, as humans make mistakes too, which is not the point—but how the absurdity of some answers reveals its lack of deeper understanding of what it’s doing. Nevertheless, the prevailing belief was that achieving AGI required simply feeding these systems with more data, more computing power, more neurons, trillions of parameters, and more of the same.

However, scientists are gradually realizing that it isn’t that simple and that there may be fundamental flaws in our understanding of what intelligence and reasoning are about. They are beginning to sense that the gap between syntax and semantics is likely much wider than previously thought. Even with enormous data sets and immense computing power, a computer will not gain a true understanding of the real world.

For those with a technical background who have some time to explore where we currently stand and what the real challenges are, I recommend the lectures by François Chollet and Gary Marcus. For a reflection on what 'artificial general intelligence' could truly mean, consider reading Melanie Mitchell’s article. If you're interested in a more humanistic and less machine-centered perspective, Shannon Vallor’s article comes closer to the point I’m trying to make: “We are more than efficient mathematical optimizers and probable next-token generators.” It seems that most AI/machine learning researchers aren’t willing (or aren’t able?) to even consider this eventuality. Moreover, in a not-so-different context, it is also noteworthy that, despite all the investments and the hype, there will be no fully autonomous self-driving cars anytime soon.

Overall, what researchers are beginning to realize is that despite billions being poured into R&D, LLMs continue to hallucinate, fabricate information, lack any true representation of the world, excel in deep learning but falter in generalized abstract reasoning, struggle to understand wholes in terms of their parts, and frequently fail on tasks involving object and spatial continuity. It’s becoming clear that, in terms of AGI-level performance, there has been no substantial progress: there is still no true reasoning, no common-sense knowledge, no 'ghost in the machine' with semantic awareness, and certainly no independent agency. Deep down, what LLMs do is next-word prediction: given a string of text, they calculate the probability of each word that could come next. Ultimately, these systems remain passive mechanisms that rely predominantly on pattern recognition and best-guessing. We are still far from developing systems with true reasoning, and the current hype feels reminiscent of past AI bubbles, where inflated expectations outpaced actual capabilities.
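For readers who like to see the idea in code, here is a deliberately tiny sketch of what "calculating the probability of the next word" can mean. It is not how a real LLM works internally: actual models use neural networks over sub-word tokens and very long contexts, whereas this toy only counts which word follows which in a made-up corpus. The function names and the corpus are my own assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict:
    """Count, for every word, how often each other word directly follows it."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probabilities(follows: dict, word: str) -> dict:
    """Turn the raw follow-counts for `word` into a probability distribution."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # the word was never seen with a successor
    return {candidate: count / total for candidate, count in counts.items()}


# Hypothetical toy corpus, chosen only to keep the arithmetic easy to follow.
TOY_CORPUS = "the cat sat on the mat the cat ate the fish"

model = train_bigram(TOY_CORPUS)
probs = next_word_probabilities(model, "the")
print(probs)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

The program "knows" that 'cat' is the most likely word after 'the' only because it was counted most often. Nothing in these counts refers to an actual cat, which is the gap between symbols and meaning at issue here.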

To make a long story short, the issue boils down to recognizing a fundamental cognitive gap between symbols and the meanings they convey. Data and the relationships between data are insufficient on their own. True understanding requires more than that.

Interestingly, this isn’t a new insight. It was first highlighted in the 1980s by John Searle’s famous ‘Chinese room’ thought experiment and further emphasized by the ‘symbol grounding problem,’ discussed by Stevan Harnad in 1990. To summarize, the symbol grounding problem points out the persistent challenge of determining where symbols (such as words, numbers, streams of bits, or signals) derive their meaning. There is a gap between symbols and signals ‘out there’ and our semantic awareness, intuition, understanding, and knowing of their meaning. There is something that remains outside the scope of formal computational models. More recently, in 2019, Froese and Taguchi revisited the issue in their discussion on “The Problem of Meaning in AI and Robotics: Still with Us after All These Years.”

There is an elephant in the room that only a few are willing to acknowledge: the possibility that human-like intelligence, with its semantic awareness, may not be naturalizable and might never be fully replicated in a machine (unless it becomes conscious).

It isn’t necessary to invoke sophisticated metaphysical speculations that posit a duality between mind and body. There is a very simple experiential fact that nobody denies, but almost everybody ignores. If we take a first-person perspective, it is easy to realize how our semantics-based cognition is directly related to a conscious experience. Always.

You cannot truly understand colors, sounds, tastes, smells, temperature, touch, or sight if you have never had a conscious experience of seeing a color, hearing a sound, tasting chocolate, smelling an odor, or touching an object. One might also tell you everything about the H2O molecule, chemistry, and physics, but you cannot comprehend what wetness truly means unless you have experienced the wetness of water yourself.[1] Without a subject (an entity or someone) to have a conscious, qualitative sensation of these things, the so-called ‘qualia’, you could provide all the textual descriptions imaginable, but the machine will never grasp anything beyond the purely abstract apprehension of symbols, letters, words, sentences, and numbers.

No matter how advanced or sophisticated an information processing system may be, it cannot understand what an image of a street, a cyclist, or a traffic light represents. Of course, the image can be converted into vector spaces, matrixes, relationships, curve fittings, probability laws, and who knows what. But, unless the same light signals on the pixels forming the image on the CCD camera are converted into a subjective lived experience, all these mathematical abstractions will remain meaningless entities. To truly comprehend these things, one must have physically experienced the environment. For example, you need to have felt the weight of your body walking on that street and have had conscious, experiential interactions with others through sound, speech, and vision. You cannot drive a car without a semantic understanding of the environment—the street, the cyclist, the traffic lights, and so on. There's no reason to believe that a self-driving car could magically understand what even humans cannot grasp without conscious experience.

Thus, there is a tight and inextricable relationship between feeling and knowing, sensing and understanding, perceiving and comprehending. You can’t disconnect these two aspects of cognition and treat them separately, as current research is doing.

In short: You cannot understand anything without consciousness—not even in principle.

The same applies to any AGI narrative. There is a direct relationship between general intelligence and consciousness. AGI cannot exist, even in principle, without becoming conscious, because true intelligence requires semantic understanding, and semantics is rooted in sentient experience. Adding another trillion neurons, an astronomical number of parameters, flooding an AI system with more data, or increasing its number-crunching power won’t do the trick. Without consciousness, there is no AGI. From a first-person perspective, this becomes self-evident.

The fact that, sooner or later, the machine makes an extremely stupid mistake that reveals its lack of understanding and thereby shows that it has no subjective experience also has other implications. For example, it constitutes a strong argument against the hypothetical existence of so-called "philosophical zombies," or "p-zombies" for short. A p-zombie is a hypothetical being imagined to be physically identical to a normal human but lacking an inner life and conscious experiences, or qualia, yet behaving exactly like a conscious human being. However, since a p-zombie would lack the sentience necessary for meaning-making, it would eventually make extremely stupid mistakes, just like other non-conscious AI systems, thus betraying its nature. It is reasonable, therefore, to conclude that beings that act like humans but lack subjective experiences do not exist, or we would have already been able to distinguish them from conscious humans. In my opinion, a meaning-making test is a much stronger and potentially much more convincing proof of the absence or presence of consciousness and intelligence in a computer than the Turing test.

Thus, if there will be any AGI, then we will have to build conscious machines first. And science is still light years away from even beginning to understand how consciousness emerges in organic intelligence itself, and how it relates to agency and life.

Therefore, there is a foundational and principled argument against the coming of an AGI. Unfortunately, IT scientists often dismiss philosophical arguments and need to learn these lessons the hard way. They are only now beginning to confront what was already understood four decades ago.[2]

The root cause of this overconfidence in an AGI revolution is that humans don't truly know themselves. We are continuously externalizing our focus and are too prone to objectifying reality, which has made us unable to see, perceive, or feel how our own cognition works. If we hadn't insisted on a mechanistic vision of the mind and life, we might have been less naive and could have directed our investments toward more fruitful directions, and, as a bonus, become more aware of what consciousness, cognition, and life truly are.

In cognitive sciences and biology, scientists are confronted with exactly the same problem, only coming from the opposite direction. Consciousness, mind, life, cognition, and agency are already there, and we wonder how this could possibly be. Now there is a slight shift towards a less reductionist worldview that, however, is still firmly anchored in a materialistic understanding of reality. Some try to 'inject' free will into life but aren't willing to give up a strictly deterministic view of reality (the so-called 'compatibilists', as I already discussed here). Others are willing to give up the machine metaphor in biology since it is now clear that it isn't working, but insist on preserving blind mechanistic processes as the fundamental primitive of life. Meanwhile, the theory of enactivism understands that the old naturalism is gone and wants to introduce a "new naturalism," but realizes that it still can’t account for the emergence of experience in the natural world. Ultimately, we persist in trying out more of the same and would like to keep a foot in both camps and have it both ways.

More academics are recognizing that something is missing in our understanding of what it means to truly 'understand.' They point out that language alone can’t lead us to human- or animal-like knowledge; context is also needed. However, context alone isn’t enough either. The next step is recognizing how consciousness plays a fundamental role in making the transition from symbols to semantics.

We are not there yet, but I believe the time will come when we realize that the failure to build an AGI will reveal something about ourselves, albeit in reverse. It won't explain how our mind works or how consciousness emerges, but it may show us what they are not and where they do not originate. It may demonstrate that consciousness, mind, and life are not mere epiphenomena of complex material machinery but are something more fundamental. Once this understanding becomes common wisdom, we will collectively transcend the straitjacket of a mechanistic and material conception of reality, particularly regarding life and ourselves. Once this shift is established, the world may truly change for the better. And, maybe, we will rediscover this ancient wisdom.

[1] This doesn’t imply that, for example, congenitally blind people can’t understand others talking about light, colors, or other visual sensations. Of course, they do. However, they gain only an indirect understanding of it related to the information they gain from the experience of these qualitative phenomena through other people, or from other senses. The “seeing” goes by sound, touch, taste, and smell. This allows them to semantically represent and comprehend visual concepts, even if they can't directly perceive them, precisely because the representation is backed by other forms of conscious experience.

[2] In principle, this could even suggest a new test for intelligence, potentially replacing the infamous Turing test. The idea would be to design a test that reveals whether a machine possesses real semantic and deep understanding, akin to that of a human. If it does, then this would indicate a form of 'intelligence' that suggests the presence of experience as well.

Thank you for this great essay! I think it is indeed very important not to be blinded by the LLMs' enormous mastery of language and not to naively ascribe to them inner subjective states that they simply don't have.

Here are some more thoughts that came to me while I read your article:

Written language is an imperfect but still amazingly effective way to invoke complex subjective experiences in the mind of the reader, considering that it consists merely of a sequence of discrete symbols. The writer tries to evoke very specific experiences in the reader's mind, and so he or she optimizes the sequence of symbols accordingly.

Here, a major point is that, in normal human communication, this physically mediated transmission of experiential content from mind to mind involves subjective value judgments on both ends: as genuine experiencers, we know which sentences are good (poetic, surprising, funny, clear, to the point, ...) and which are bad (boring, nonsensical, unclear, out of context, ...).

One might therefore think that a machine without subjective experience, like an LLM, cannot participate successfully in this language game because it cannot judge the experiential value of the words it reads or writes.

But it somehow happens that all "good" texts with high human value—an almost vanishing subset within the much larger set of all possible texts—have characteristic statistical properties in common that can be learned by an LLM. When the LLM is producing word after word (with a certain degree of randomness) and always stays within the high-value subset, the produced text will likely evoke positive subjective experiences in the reader.

Now, this is clearly a kind of "mimicry," because the LLM is using objective, publicly available statistical properties of word sequences (derived from the training corpus) instead of subjective, private experiences to compose its sentences.

But I suspect that to a certain extent we humans do the same when we speak or write. At least in my personal introspection, the next word I produce (most of the time) just pops up from the "unconscious," without continuous conscious supervision. Could it not be that we have trained our language capabilities to such an extent that it becomes almost an automatic process, like riding a bike? This automatic process might even use learned statistical properties of 'good' sentences in a way similar to an LLM.

And yet, while our unconscious next-word generator is producing text, this evokes a simultaneous stream of subjective experiences in our mind. We genuinely experience our self-generated language and thus we can evaluate it and guide it in a certain desired direction.

I feel the same way when I am improvising jazz on the piano: the musical phrases just flow from the unconscious and are like semi-random samplings from a large learned repertoire. They are automatically fitted into the momentary context of the improvisation. The mind monitors this flow of objective sound, producing a chain of complex emotions that are continuously evaluated. The mind gives occasional feedback to the lower levels in an attempt to optimize pleasure. Sometimes creative "errors" happen, and I play something that I have never played before but which sounds great. I will then try to remember that and make it part of my repertoire of phrases.

So, at least in a superficial way, we may on short timescales rely on learned statistical regularities, but on larger timescales use our subjective experience to guide the micro-process of next-word or next-sound production.

An alternative model of mind along these lines... "The Challenge to AI: Consciousness and Ecological General Intelligence," 2024, DeGruyter.. on Amazon. I think you'd enjoy it...