Big Science or Big Flop? Part-II

Science needs to come from within, not from science CEOs

Admittedly, there were also some successful big science projects. The most notable ones that come to mind might be the Hubble Space Telescope or the Cassini interplanetary probe to Saturn (but these underlined again the success of unmanned space exploration vs. human space exploration), or the project that led to the discovery of gravitational waves, which were predicted by Einstein’s theory of general relativity. Another example is CERN, the European Organization for Nuclear Research, which, with its particle accelerators in Geneva, contributed to confirming the standard model of particle physics. But its particle accelerators did not, after all, lead to a big conceptual change in the theories; they merely confirmed the theoretical predictions that particle physicists had already made. And, apart from a small elite group that can occupy themselves with foundational issues, CERN is mainly a huge industrial-engineering enterprise, where novel ideas only seldom come into being. Curiously, John Bell, an Irish particle physicist at CERN in the 1960s who was mainly concerned with accelerator design, made his greatest contribution to the foundations of quantum physics instead. However, he worked on that pursuit during his free time, on weekends.

CERN’s latest creation, the world’s biggest particle accelerator, the Large Hadron Collider (LHC), has yet to prove itself. So far (as of 2019) it has only discovered something already predicted by theory, the Higgs boson. The LHC seems unable to achieve what it was designed for: to find the signature of any new physics, such as some hint that could lead us beyond the standard model of particle physics, or a paradigm shift like those brought about by Einstein’s relativity or quantum mechanics. Superstrings and supersymmetry are very complex theories that were developed in a concerted effort by most theoretical physics departments all over the world to go beyond this standard model. An excessive amount of resources and young minds were devoted to this goal, to the detriment of other lines of research and promising developments in physics. This should have led us beyond our present understanding of the world, but the experimental evidence casts doubt on that happening, and no new ‘quantum revolution’ is in sight. It seems that Nature didn’t appreciate the human effort to decipher its complexity, and decided that things should work otherwise. The lack of evidence for supersymmetry or anything beyond the standard model of particles is leading to a crisis in physics. A ‘nightmare scenario’, as some physicists call it, is rising on the horizon. The nightmare is that probably thousands of physicists have spent the last 30 years running after a chimera.

Anyway, apart from some specific successful cases, history suggests that the excessive focus on the big-science approach that we have observed for more than half a century must make way for a more critical view. Despite the strong popular media coverage, whose hype always tends to suggest otherwise, we can now confidently say that, in retrospect, the success and outcome of these huge scientific efforts have been quite limited compared to the original expectations. People, especially those who have to pay taxes in times of financial crisis, are getting more and more skeptical and nervous about titanic investments in gigantic science projects. And rightly so. It must be said that those responsible for this state of affairs were, to a large extent, not only politicians but also great men of science, who were, and continue to be, ready to sacrifice several smaller good science projects in the name of big science. And interestingly, the most valuable results emerging from these mammoth projects were to be found in the field of pure science, not in the applied sciences. This again underlines how unreasonable it is to ask a priori for practical results. Maybe it is time to learn that it is Nature that should guide us to potential applications, instead of us trying to predict them with our limited understanding.

So, should we therefore stop spending money on science projects? No, quite the contrary: we should double our spending on science. And, of course, there are things that will never be achieved without international big-science initiatives. Building a space station, sending humans to the moon and Mars, or building nuclear fusion reactors will still require a joint effort on the part of thousands of specialists working hard in a concerted manner for years, if not decades.

However, we should focus the resources on the right projects, and especially in the right manner. And who decides what the right ones are supposed to be? Of course, this will forever remain a subjective point of view. But what about funding several small science projects instead of a single big science one? Does it really make sense to divert all the funds, efforts, and skills of people into the n-th mammoth project? What about spending several smaller amounts of money on many risky projects rather than huge amounts on mainstream ones? Albert Fert and Peter Grünberg were awarded the Nobel Prize in Physics in 2007 for the discovery of giant magnetoresistance, which allowed the manufacture of modern gigabyte hard-drive storage technologies. They developed their technique with an investment of about €5000. Three years later, Andre Geim and Kostya Novoselov received the Nobel Prize for their experiments on the two-dimensional material graphene. They had extracted single-atom-thick layers from bulk graphite by lifting them off with simple adhesive tape. The curious fact is that in 2013 the European Union launched an ambitious project called the ‘Graphene Flagship’, investing €1 billion over 10 years to push graphene into commercial markets. Only six years later, Terrance Barkan, the executive director of the Graphene Council, noted that the project had not lived up to expectations and embarrassingly admitted: “The main observation I have made over the past six years of its existence is that the real progress in graphene commercialization has come from outside the Graphene Flagship.” What an irony: a billion-euro project is incapable of delivering what a €5000 experiment could!

Still, paradoxically, it is easier to get millions or billions in funds for conventionally accepted lines of research than a few thousand dollars for small and cheap original projects. It is sometimes almost impossible to obtain a grant as modest as $50,000 for a postdoc working on a small but novel, non-traditional line of research, just because it is new (i.e. risky), original (i.e. of uncertain outcome), and non-mainstream (read: it is about giving out money to a ‘black sheep’ who does not bleat with the flock). Statistical and historical records show that the scientific impact per dollar is turning out to be progressively lower for large grant-holders, and that the hypothesis that large grants lead to great discoveries is inconsistent with the evidence. We should reconsider the current wave of enthusiasm for stratospheric projects. This is not about entirely abolishing big science, which might still be indispensable in some fields, but it is about rediscovering the potential of small enterprises and initiatives, especially that of the individual scientist.

The real point is that despite all these huge investments in science, academic projects, and consistent cultural and scientific promotions, there has been no real paradigm shift. Sure, you will be able to name a lot of great scientists, Nobel laureates, and geniuses who have made groundbreaking discoveries up until our time. But where are the new Copernicuses, Keplers, Galileos, Newtons, or Einsteins? It seems that after Einstein the scientific genius has become extinct. It was not big science or huge industrialized and highly organized academic structures that convinced a doctor of canon law like Nicolaus Copernicus that the Sun is at the center of the solar system. Heliocentrism was a principle embraced for some very simple observational and personal aesthetic reasons. Big science did not lead to new great paradigm shifts like that of relativity, which was sparked by ‘Mister Nobody’, Albert Einstein, working in a Swiss patent office. No new ‘quantum revolution’ is in sight like the one introduced around the beginning of the 20th century by a professor with zero research funds, Max Planck, who informed the world that he had a crazy idea he himself could hardly believe in: energy must be absorbed and emitted in discrete quantities, not in a continuous fashion. Nowadays we are looking for the theory that should unify gravity with the electromagnetic and nuclear forces. Physicists have been searching for it for the last 70 years, but there is still no new Planck to alleviate their pains. Are the great paradigm shifts, the Copernican revolutions of the past, definitively over? Why, considering the great efforts and expenditures of big science, don’t we see groundbreaking new theories today, like those of relativity and quantum mechanics, which changed our worldview? Of course, lots of original ideas are seen today too, but most come from complicated calculations, not from some fundamentally new ‘way of seeing’ the world. New first principles are lacking.
Why? How could that be? Shouldn’t a world where science is so central, so much encouraged, and so well-funded, reserve ample space for creative scientific thinkers?

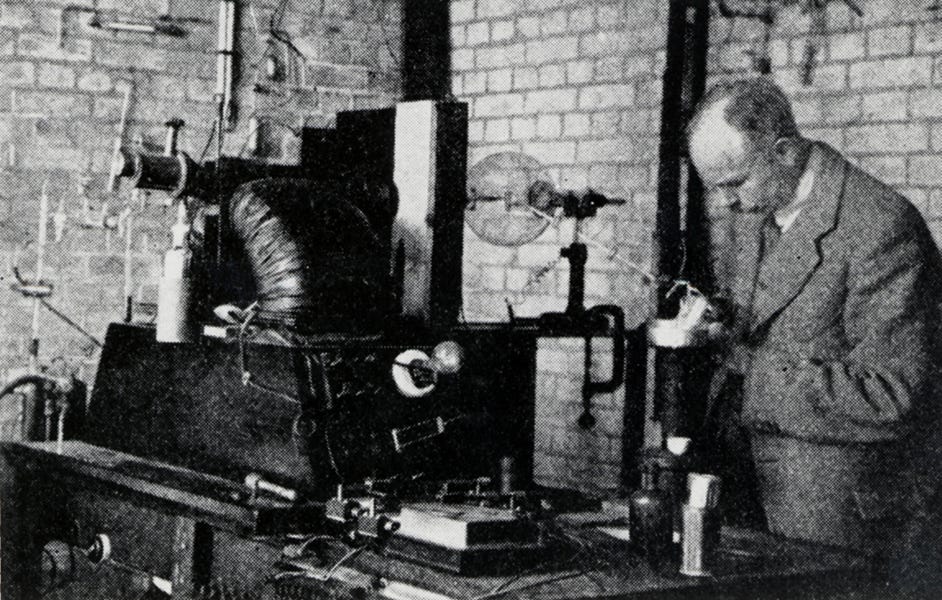

Ernest Rutherford, the physicist and Nobel laureate who is considered the father of modern nuclear physics, is often cited as the initiator of modern big science. He led the Cavendish Laboratory in Cambridge, UK, from 1919 until his death in 1937. Under his direction, other researchers and students also made historic discoveries in atomic physics, and many of them received the Nobel Prize too. Yet much of the work in his laboratory used simple, inexpensive devices, and things did not proceed as one would expect in a top research center. A former student of his (and also a Nobel laureate), James Chadwick, confessed: “I did a lot of experiments about which I never said anything. Some of them were quite stupid. I suppose I got that habit or impulse, or whatever you’d like to call it, from Rutherford. He would do some damn silly experiments at times, and we did some together. They were really damned silly. But if we’d gotten a positive result, they wouldn’t have been silly.” Mark Oliphant, another student and co-worker of Rutherford, said: “Rutherford also lectured on the atom, with great enthusiasm, but not always coherently or well prepared.”

How is it that a laboratory with simple equipment and roughly thirty research students who (more or less secretly) made ‘stupid’ and ‘silly’ experiments, and were lectured by an ‘unprepared’ person, could nevertheless produce an impressive number of Nobel laureates, and rewrite entire chapters of 20th century physics, while so many other big-science projects, funded with billions of dollars and run by thousands of scientists, failed to furnish nearly comparable results? There is a wide range of evidence showing that research effort is rising substantially while research productivity is declining sharply.

The typical objection is that some discoveries will never again come from little projects, and the times of the lonely genius working in the patent office, or the science project led by a bunch of smart but unorganized people, are over. The testing of new theories, and the advancement of science, now need a huge, concentrated financial and human effort that little research laboratories can’t afford. Nowadays, interdisciplinary collaborations of big teams with complex, hi-tech hardware are necessary if we want to discover the secrets of the universe. This is the argument. And, admittedly, there is some truth in that. Without the Hubble Space Telescope and the large colliders, our understanding of the universe would not have progressed in some sectors of fundamental science. But a verdict that the times of the intuitive, independent, and original thinkers (and, yes, also those of the banned ‘lonely thinker’) are over has no grounds. They must inevitably come back, since creativity and curiosity are intrinsic to human nature, and form part of an inner expression of the Homo sapiens sapiens, and are not something that comes and goes.

Behind these gigantic technological efforts stand, first and foremost, commercial interests. However, there also stand unjustified, and to some extent irrational, beliefs and hopes, sometimes strongly promoted by quite smart intellectuals. Such beliefs include the notion that these technologies will project us towards a future society in which everything is completely automated and regulated by some futuristic AI and computer technology that will do all the work for us. The most optimistic scenario foresees humanoid robots that will take over all our jobs and our physical and intellectual activities, allowing the human species to lean back and enjoy a life-long vacation. This wishful thinking is, however, contradicted by the last two centuries of technological evolution. The trend towards replacing human labor with automated machinery killed jobs, but it also created new needs and consumer desires, which quickly demanded new skills and competencies that were formerly unknown or unnecessary. These illusions are not new. Already in 1930, the British economist John Maynard Keynes predicted that, due to extensive industrialization and economic and technological progress, by the time his grandchildren had grown up, we would be working only 15 hours a week. Not only was this a much too optimistic projection, as a 40-hour week remains the normal state of affairs, but, almost a century later, the contrary tendency is quite evident. A workweek of 50 or 60 hours is no longer something that burdens only managers or slaves. People who experience so much stress that they develop burnout syndrome aren’t rarities, either. The bottom line is that the progress of science or big science, technology, and the economy alone won’t lead us to a better and more relaxed society if we do not also grow something inside us that progresses in parallel with the outward conditions.

(Re-)Discovering Creative Thinking

The point is that we are living in times where the creative thinker, guided by an inner intrinsic motivation, is simply de-selected by the system a priori. The problem is that science has become too ‘central’, in the sense that it is too centralized and industrialized. Big science has also become a big enterprise with a big army, organized according to a managerial top-down hierarchy of subordinate and obedient employees who are pressured continuously by deadlines assigned from the top, which tells them what to do, how to do it, and when to do it. It is a system that officially claims to encourage independent thinking, while in practice it goes in the exact opposite direction. In its intrinsic structure and organization, it is incapable of leaving much space, if any, for the personal and spontaneous development of the genius. It should have been clear from the beginning that it couldn’t deliver on the promises it made. It is time to rethink all that from the ground up.

We frequently hear people talking about the autonomy and freedom of science. But, in this regard, most research centers of today are the problem, not the solution. They look exclusively at the speed and precision of intellectual reproduction and the potential for manufacture, hopefully with lots of papers published, and possibly complemented by the individual’s good communication skills, in order to keep the image and prestige of the group or department high. But motivation (intrinsic or extrinsic) is officially seen as secondary. A free-progress environment is necessary because there are several young students, or potential students, who feel the inner drive to explore the deeper meaning of things, who have an open and curious mind toward alternative approaches, and who have great inspirations and aspirations that could serve the collective development of a nation, or even humanity as a whole. But, when they enroll in a college, they discover that there is no opportunity to express themselves. Individual development is hampered. They are forced to repress their own inner potential and are compelled to follow lines that are not their own. If they want to make a career, they have no other way out than to sacrifice everything to a study and professional path that has nothing to do with what their inner soul is longing for and with what their real destiny should be.

Many of these individuals are led to believe that there is something wrong with them, fall prey to depression and stress, and finally abandon entirely the studies they had pursued for several years. Nowadays, those who perceive an urge to go beyond a mere analytical and superficial understanding of the physical world, those who want to focus on specific subjects because there is an inner drive to do so, must set aside these yearnings. They would like to progress and change and evolve, but are forced to inhibit and even suppress their own evolution.

Nowadays everyone is talking about ‘excellence’. But what is excellence? Setting up highly selective institutions that bring together the ‘best brains’ and order them to do what is required from the top, like chickens in a henhouse? Every manager would deny this, and all would unanimously tell us that they look for creative and original thinkers. The facts on the ground are quite different. The typical modern managerial mindset calls for more creative thinking and originality in schools but does not allow it in its own entrepreneurial environment. Working under pressure and multitasking is the motto and main pedagogical ideal of several top managers and academics, who continue to ignore the basic facts emerging from psychology and brain research, which clearly tell us that this is the most counterproductive approach. This is a pedagogy that dictates the what, when, and how of the job. Every attempt to put forward one’s own ideas, projects, or alternative approaches is seen as an irritating attempt to overthrow authority. Obviously, those in charge are always a bit disappointed that, despite having under their grip several people who eventually publish lots of scientific papers, not many groundbreaking ideas emerge. There is a pressure that generates fear, anger, sadness, and frustration, and ultimately hampers the emergence of a further consciousness in most individuals studying and working in industries or schools, universities, and research centers. And, if things continue to go wrong, the pressure is increased. But it should become clear instead that the solution is not to persist in doing more wrong things. An entirely new approach is needed. Real excellence can come only from within. This awareness remains as alien to most teachers, academic figures, and managers today as it was in the past.